Summary: I look at averages for hitters and pitchers in the postseason to see how their quality (relative to league average) has changed over time. Unsurprisingly, the gap between postseason and regular season average pitchers is larger than the comparable gap for hitters. The trend over time for pitchers is expected, with a decrease in quality relative to league average from the 1900s to mid-1970s and a slight increase since then that appears to be linked with the increased usage of relievers. The trend for hitters is more confusing, with a dip from 1950 to approximately 1985 and an increase since then. Overall, however, the average quality of both batters and pitchers in the postseason relative to league average is as high as it has been in the expansion era.

Quality of play in the postseason is a common trope of baseball discussion. Between concerns about optics (you want casual fans to watch high quality baseball) and rewarding the best teams, there was a certain amount of handwringing about the number of teams with comparatively poor records making it into the playoffs (e.g., the Giants and Royals made up the only pair of World Series teams ever without a 90-game winner). This prompted me to wonder about the quality of the average players in the postseason and how that’s changed over time with the many changes in the game: increased competitive balance, different workloads for pitchers, changes in the run environment, etc.

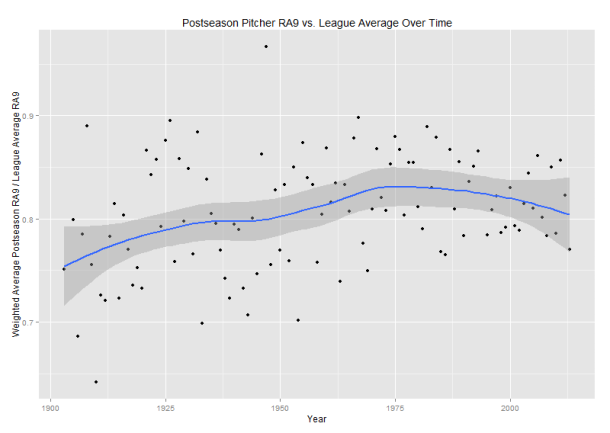

For pitchers, I looked at weighted league-adjusted RA9, which I computed as follows:

- For each pitcher in the postseason, compute their Runs Allowed per 9 IP during the regular season. Lower is better, obviously.

- Take the average of those RA9 figures across all postseason pitchers, weighting each pitcher by the number of batters he faced in the postseason.

- Divide that average by the major league average RA9 that year.
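The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the code behind the charts: the `PlayoffPitcher` record, its field names, and the sample numbers are all made up, and it assumes the weights are postseason batters faced and that every pitcher recorded at least one regular-season out.

```python
from dataclasses import dataclass


@dataclass
class PlayoffPitcher:
    """Hypothetical record; field names are illustrative, not Lahman or Fangraphs columns."""
    name: str
    reg_runs: int   # runs allowed in the regular season
    reg_outs: int   # outs recorded in the regular season (IP * 3)
    post_bf: int    # batters faced in the postseason (the weight)


def ra9(runs: int, outs: int) -> float:
    """Runs allowed per nine innings (27 outs)."""
    return 27.0 * runs / outs


def adjusted_playoff_ra9(pitchers, league_runs, league_outs):
    """Batters-faced-weighted regular-season RA9, divided by league RA9."""
    total_bf = sum(p.post_bf for p in pitchers)
    weighted = sum(ra9(p.reg_runs, p.reg_outs) * p.post_bf for p in pitchers) / total_bf
    return weighted / ra9(league_runs, league_outs)


# Made-up numbers purely for illustration.
staff = [
    PlayoffPitcher("Starter A", reg_runs=60, reg_outs=600, post_bf=100),  # RA9 = 2.70
    PlayoffPitcher("Reliever B", reg_runs=40, reg_outs=270, post_bf=50),  # RA9 = 4.00
]
# League RA9 here is 27 * 20000 / 120000 = 4.50.
ratio = adjusted_playoff_ra9(staff, league_runs=20000, league_outs=120000)
print(round(ratio, 3))
```

A ratio below 1.0 means the average playoff plate appearance came against a pitcher who allowed fewer runs than league average during the regular season.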

You can think of this as the expected result you would get if you chose a random plate appearance during the playoffs and looked at the pitcher’s RA9. Four caveats here:

- Because it uses RA9, this is a combined pitching/defense metric that really measures how much the average playoff team is suppressing runs relative to league average.

- This doesn’t adjust for park factors, largely because I thought that adjustment was more trouble than it was worth. I’m pretty sure the only effect that this has in aggregate is injecting some noise, though I’m not positive.

- I considered using projected RA9 instead of actual RA9, but after playing around with the historical Marcel projections at Baseball Heat Maps, I didn’t see any meaningful differences in aggregate.

- For simplicity’s sake, I used major league average rather than individual league average, which could influence some of the numbers in the pre-interleague play era.

When I plot that number over time, I get the following graph. The black dots are observed values, and the ugly blue line is a smoothed rolling estimate (using LOESS). (The gray is the confidence interval for the LOESS estimate.)

While I wouldn’t put too much weight on the LOESS estimate (these numbers are subject to a fair amount of randomness), it’s pretty easy to come up with a basic explanation of why the curve looks the way it does. For the first seventy years of that chart, the top pitchers pitched ever smaller shares of the overall innings (except for an uptick in the 1960s), ceding those innings to lesser starters and dropping the average quality. Starting in the 1970s, however, relievers have covered larger portions of innings (covered in this FiveThirtyEight piece), and since relievers are typically more effective on a rate basis than starters, that’s a reasonable explanation for the shape of the overall pitcher trend.

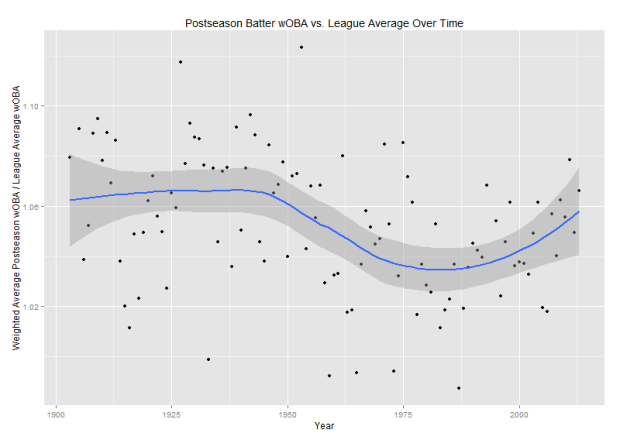

What about hitters? I did the same calculations for them, using wOBA instead of RA9 and excluding pitchers from both postseason and league average calculations. (Specifically, I used the static version of wOBA that doesn’t have different coefficients each year. The coefficients used are the ones in The Book.) Again, this includes no park adjustments and rolls the two leagues together for the league average calculation. Here’s what the chart looks like:

Now, for this one I have no good explanation for the trend curve. There’s a dip in batter quality starting around integration and a recovery starting around 1985. If you have ideas about why this might be happening, leave them in the comments or on Twitter. (It’s also quite possible that the LOESS estimate is picking up something that isn’t really there.)
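For anyone who wants to reproduce the hitter numbers, here is a rough sketch of a static wOBA calculation. The weights are the ones I recall from The Book and should be checked against the source before use; the dictionary keys, the function name, the choice of plate appearances as the denominator, and the sample stat line are all assumptions made for illustration.

```python
# Static linear weights as recalled from The Book (an assumption; verify before use).
# RBOE is reached base on error, which many data sources do not record.
STATIC_WEIGHTS = {
    "nibb": 0.72,  # non-intentional walks
    "hbp": 0.75,
    "1b": 0.90,
    "rboe": 0.92,
    "2b": 1.24,
    "3b": 1.56,
    "hr": 1.95,
}


def static_woba(counts, pa, weights=STATIC_WEIGHTS):
    """Weighted sum of offensive events per plate appearance.

    Events missing from `counts` are treated as zero, so RBOE can be
    omitted when the data source lacks it.
    """
    numerator = sum(w * counts.get(event, 0) for event, w in weights.items())
    return numerator / pa


# Made-up stat line purely for illustration.
hitter = {"nibb": 50, "hbp": 5, "1b": 100, "2b": 30, "3b": 5, "hr": 20}
example_woba = static_woba(hitter, pa=600)
print(round(example_woba, 3))
```

From there the procedure mirrors the pitcher calculation: average the regular-season wOBA of every postseason hitter, weight by postseason plate appearances, and divide by the league-wide figure with pitchers excluded.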

What’s the upshot of all of this? This is an exploratory post, so there’s no major underlying point, but from the plots I’m inclined to conclude that, relative to average, the quality of the typical player (both batter and pitcher) in the playoffs is as good as it’s been since expansion. (To be clear, this mostly refers to the 8-team playoff era of 1995–2011; the last few years aren’t enough to conclude anything about letting two more wild cards in for a single game.) I suspect one reason is that, while the looser postseason restrictions have made it easier for flawed teams to make the playoffs, they’ve also made it harder for very good teams to be excluded because of bad luck, which lifts the overall quality, a point raised in this recent Baseball Prospectus article by Sam Miller.

Two miscellaneous tidbits from the preparation of this article:

- I used data from the Lahman database and Fangraphs for this article, which means there may be slight inconsistencies. For instance, there’s apparently an error in Lahman’s accounting for HBP in postseason games the last 5 years or so, which should have a negligible but non-zero effect on the results.

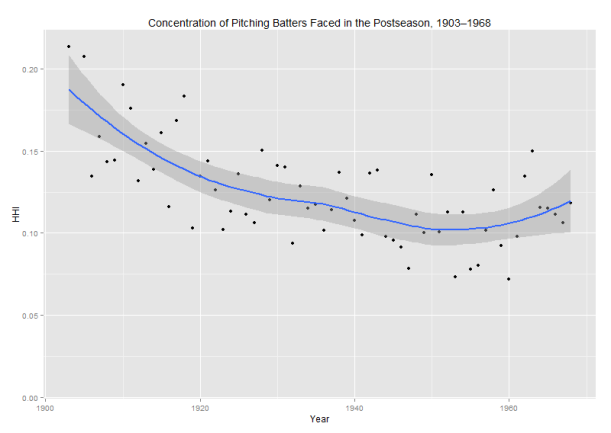

- I mentioned that the share of batters faced in the postseason by the top pitchers has decreased steadily over time. I assessed that using the Herfindahl-Hirschman index (which I also used in an old post about pitchers’ repertoires). The chart of the HHI for batters faced is included below. I cut the chart off at 1968 to exclude the divisional play era, which by doubling the number of teams decreased the level of concentration substantially.
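As a quick illustration of the concentration measure, the Herfindahl-Hirschman index is the sum of squared shares. The sketch below assumes each share is a pitcher's fraction of all postseason batters faced in a given year; the function name and the sample counts are made up.

```python
def hhi(batters_faced):
    """Herfindahl-Hirschman index: sum of squared shares of the total.

    Ranges from 1/n (workload spread evenly over n pitchers) up to 1.0
    (one pitcher faces every batter).
    """
    total = sum(batters_faced)
    return sum((bf / total) ** 2 for bf in batters_faced)


# Made-up postseason workloads purely for illustration.
concentrated = hhi([50, 30, 20])   # a few pitchers carry most of the load
even = hhi([25, 25, 25, 25])       # load split evenly across four pitchers
print(round(concentrated, 2), round(even, 2))
```

A falling HHI over time corresponds to the top pitchers facing a smaller share of postseason batters.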